If you’re in the market for business analytics software, the allure of new technologies can pull you down a rabbit hole of temptation. Tread carefully, for there’s many a slip between the cup and the lip.

Being hasty in software selection can land you in hot soup if the new system fails to deliver. Learn what to expect from the latest software trends with an in-depth discussion about the future of business analytics.

Compare Top Business Analytics Software Leaders

What This Article Covers

- Key Takeaways

- Top Business Analytics Trends

- Next Steps

Key Takeaways

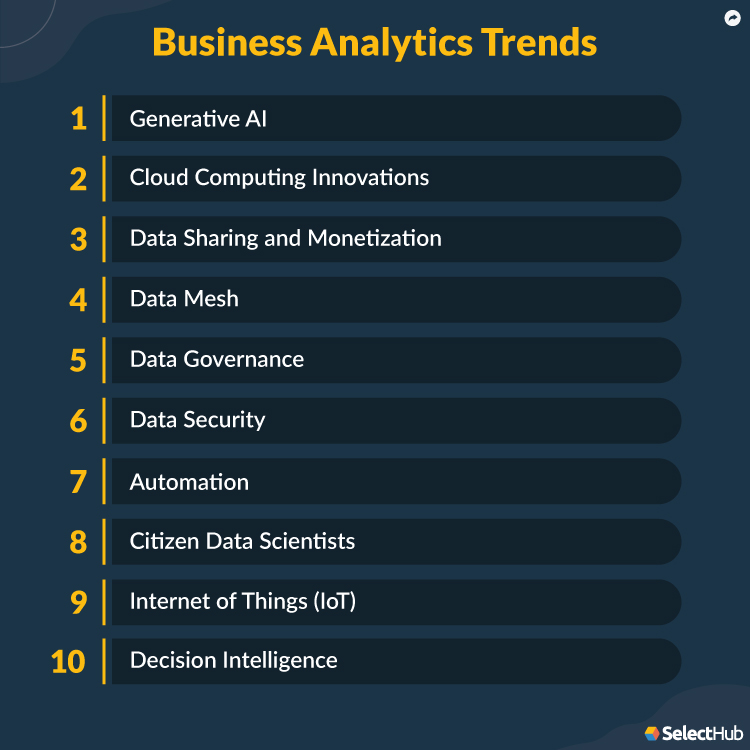

- Among business analytics trends, generative AI will drive data democratization and the need for strict usage guidelines.

- Cloud computing costs will drive the quest for cost-effective querying techniques.

- Data sharing will continue to break down information silos.

- The data mesh will provide a viable solution to managing data at scale.

- Data governance will remain a priority.

- Data privacy and security concerns will be top trends in business analytics.

- Citizen data scientists will take over analyst roles, but specialized skills will be in demand.

- Thanks to automation, systems will deploy faster and scale flexibly.

- Internet of Things (IoT) data security and costs will continue to be an improvement area for software vendors.

- Decision intelligence will reduce the gaps in decision-making by combining the social sciences with AI-ML.

Top Trends for 2024

1. Generative AI

Despite data democratization, fewer than half of company executives can use data software independently. The calculations are too complex, building dashboards and reports is too technical, and not everyone has the required skills.

Generative AI promises to close this gap, and organizations have taken to it like fish to water, as this McKinsey Global Survey confirms.

40% of survey respondents said their organizations would increase investment in AI because of the promise generative AI shows.

Large language models (LLMs), a core part of generative AI, are deep learning models that perform a range of natural language tasks. They are trained by taking in text and repeatedly predicting the next word.
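To make the “predict the next word” idea concrete, here is a toy sketch in Python. It is a bigram model that simply counts which word follows which in a tiny made-up corpus. Real LLMs use transformer neural networks trained on vast text corpora, so treat this only as an illustration of the training objective, not of how any vendor’s model works.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count how often each word follows each other word in the training text."""
    words = corpus.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(model: dict[str, Counter], start: str, length: int) -> list[str]:
    """Predict one word at a time, feeding each prediction back in as the next input."""
    output = [start.lower()]
    for _ in range(length):
        nxt = predict_next(model, output[-1])
        if nxt is None:
            break
        output.append(nxt)
    return output


model = train_bigram("sales grew in march . sales grew in april . costs fell in may .")
print(generate(model, "sales", 2))  # ['sales', 'grew', 'in']
```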

What’s Next?

Early instances of LLM usage have had mixed results, and the jury’s still out on where it fits into business processes. Naysayers were quick to dismiss AI as unreliable and out of control. Media reports about leaked company data didn’t help.

But the industry isn’t ready to give up yet, and those who can are building LLMs in-house. Besides, organizations with embedded AI already have a head start.

- ThoughtSpot is among the early adopters, building generative AI on top of its pre-existing LLM.

- Qlik introduced OpenAI connectors to enhance AI-ML and NLP (natural language processing) capabilities.

- Independent software vendors (ISVs) like Salesforce provide generative AI in applications by partnering with AWS.

Building LLMs for specific verticals is a trend to watch. Bloomberg, for instance, built an LLM dedicated to advancing NLP in the financial industry. There is already a noticeable shift toward reskilling across the industry.

2. Cloud Computing Innovations

Cloud verticals continue to be in demand, bringing the versatility of the cloud to industry-specific solutions. Oracle Fusion Analytics, for example, provides ready-to-go analytics for ERP, HCM, sales, finance and supply chain management.

- Qlik and Power BI offer app-building modules — you can design and publish apps for specific tasks, and the whole team benefits.

- Urban Outfitters uses over 240 Qlik apps for tracking eCommerce sales, store performance, operations and supply chain management.

As Marijan Nedic, Vice President and Head of IT Business Solutions, SAP, said:

“I believe what separates you from your competitors is not the majority of our operations; it’s the 5-to-10% of your operations that are unique.”

What’s Next?

According to Allied Market Research, the cloud services market will reach $2.5 trillion in 2031. But the high cost of live querying puts real-time analytics out of reach for many organizations.

Cloud subscriptions are usage-based: you pay for what you use, including the number of queries, allocated RAM, concurrency, data volume and server hardware.
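A rough way to reason about usage-based billing is to multiply each metered dimension by its unit price and add the results. The sketch below assumes just three dimensions and made-up rates; real vendors meter different things and publish their own price lists.

```python
from dataclasses import dataclass


@dataclass
class UsageRates:
    """Hypothetical unit prices; not any vendor's real pricing."""
    per_1k_queries: float
    per_gb_ram_hour: float
    per_tb_scanned: float


def estimate_monthly_cost(queries: int, ram_gb_hours: float,
                          tb_scanned: float, rates: UsageRates) -> float:
    """Sum each metered dimension multiplied by its unit price."""
    total = (
        queries / 1000 * rates.per_1k_queries
        + ram_gb_hours * rates.per_gb_ram_hour
        + tb_scanned * rates.per_tb_scanned
    )
    return round(total, 2)


rates = UsageRates(per_1k_queries=0.50, per_gb_ram_hour=0.01, per_tb_scanned=5.00)
print(estimate_monthly_cost(200_000, 10_000, 4, rates))  # 220.0
```

In a model like this, every live dashboard refresh adds to the query term, which is why trimming unnecessary queries is the usual first lever.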

- Some vendors offer in-database analysis to avoid moving data.

- TIBCO provides usage reports that identify peak consumption times, so you can scale back low-priority queries during those periods.

- Restricting data access based on predetermined usage quotas or roles is another way to keep query volumes in check.
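Role-based quotas can be as simple as a per-user counter that resets daily. The roles, limits and `QueryGate` class below are hypothetical; commercial platforms expose this kind of control through their own admin settings.

```python
from collections import defaultdict


class QueryGate:
    """Reject queries once a user's role-based daily quota is used up."""

    def __init__(self, quotas: dict):
        self.quotas = quotas          # role -> queries allowed per day (hypothetical values)
        self.used = defaultdict(int)  # user -> queries run so far today

    def allow(self, user: str, role: str) -> bool:
        limit = self.quotas.get(role, 0)  # unknown roles get no quota
        if self.used[user] >= limit:
            return False
        self.used[user] += 1
        return True

    def reset_day(self) -> None:
        """Call once per day, e.g. from a scheduler, to start fresh counts."""
        self.used.clear()


gate = QueryGate({"analyst": 500, "viewer": 2})
print([gate.allow("ann", "viewer") for _ in range(3)])  # [True, True, False]
```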

Expect vendors to keep focusing on cost-effective querying.

3. Data Sharing and Monetization

A data fabric is an information-sharing architecture that spans systems. Data marketplaces are a first step toward making it happen: they allow enterprises to buy, sell and exchange data within the bounds of the GDPR.

You don’t need to provision additional hardware or databases; modern processing technologies can even work on data while it remains encrypted.
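Processing encrypted data sounds like magic, so here is a toy illustration of one technique that makes it possible: Paillier encryption, which lets anyone add numbers while they stay encrypted. The primes are deliberately tiny and insecure, and nothing here implies that any particular marketplace uses Paillier; treat it as a sketch of the idea.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Toy Paillier key pair from two primes; real keys use primes hundreds of digits long."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse (Python 3.8+); valid because we use g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Encrypt 0 <= m < n with fresh randomness, so equal values give different ciphertexts."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n: int, private_key, c: int) -> int:
    lam, mu = private_key
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying two ciphertexts yields an encryption of the sum of their plaintexts."""
    return (c1 * c2) % (n * n)


n, private_key = keygen(293, 433)
encrypted_total = add_encrypted(n, encrypt(n, 120), encrypt(n, 80))  # computed without decrypting
print(decrypt(n, private_key, encrypted_total))  # 200
```

The party holding only `n` can compute `encrypted_total`, but only the holder of `private_key` can read the result.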

Monetizing data through such marketplaces benefits both companies and end users.

- It gives companies much-needed customer insights to work with so they can innovate and improve their products to sell better.

- Regulated industries like finance, healthcare and insurance can gain comprehensive information on patients, loan applicants and other customers.

- Snowflake provides data clean rooms where participants can securely combine proprietary data with third-party data.

- John Deere sells data from sensors installed in its tractors back to farmers.

- Skywise is an aviation-focused platform that provides access to data from over 100 airlines and spare part suppliers. It helps identify defect patterns, forecast machinery failures, and optimize parts replacement.
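A data clean room’s core promise is that partners learn something about their shared customers without handing over raw records. The sketch below assumes both parties agree on a secret key, convert emails into keyed hashes (HMAC-SHA256), and release only the overlap count, suppressed when it is too small. It is a hypothetical illustration, not Snowflake’s clean room API; keyed hashing alone is not a complete privacy guarantee, and real clean rooms add far more controls.

```python
from __future__ import annotations

import hashlib
import hmac

MIN_OVERLAP = 3  # hypothetical privacy threshold: suppress results about tiny groups


def match_key(email: str, shared_secret: bytes) -> str:
    """Turn an email into an opaque key both parties can compute, using HMAC-SHA256."""
    normalized = email.strip().lower().encode("utf-8")
    return hmac.new(shared_secret, normalized, hashlib.sha256).hexdigest()


def overlap_count(party_a: list[str], party_b: list[str], shared_secret: bytes) -> int | None:
    """Return how many customers the parties share, or None if the count is too small to release."""
    keys_a = {match_key(e, shared_secret) for e in party_a}
    keys_b = {match_key(e, shared_secret) for e in party_b}
    count = len(keys_a & keys_b)
    return count if count >= MIN_OVERLAP else None


secret = b"agreed-out-of-band"  # hypothetical key the two parties exchange securely
retailer = ["ann@example.com", "bob@example.com", "cy@example.com", "dee@example.com"]
brand = ["ANN@example.com", " bob@example.com", "cy@example.com", "zed@example.com"]
print(overlap_count(retailer, brand, secret))  # 3
```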

What’s Next?

It’s hard work. Making data securely available to other entities and processing incoming data that’s probably encrypted can seem like an additional burden to busy teams.

Besides, not everyone is on board. Family-owned businesses and companies with established data practices are reluctant to expose their information externally.

The demand for software that can encrypt and anonymize data for sharing will rise. Staying in silos helps no one, and the only way forward is to move ahead with guardrails and consistent security assessment.
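One common anonymization check is k-anonymity: every combination of quasi-identifiers (fields like ZIP code and age that could re-identify someone when combined) must be shared by at least k records. The sketch generalizes exact ages into ten-year bands and then checks the rule. The field names and k value are assumptions for illustration, and k-anonymity on its own does not guarantee privacy.

```python
from collections import Counter


def generalize_age(age: int) -> str:
    """Replace an exact age with a ten-year band, e.g. 37 -> '30-39'."""
    low = (age // 10) * 10
    return f"{low}-{low + 9}"


def is_k_anonymous(records: list, quasi_identifiers: list, k: int) -> bool:
    """True if every combination of quasi-identifier values appears at least k times."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return all(size >= k for size in groups.values())


raw = [
    {"zip": "10001", "age": 34, "spend": 120},
    {"zip": "10001", "age": 37, "spend": 80},
    {"zip": "94105", "age": 52, "spend": 200},
    {"zip": "94105", "age": 58, "spend": 150},
]
shared = [{**r, "age": generalize_age(r["age"])} for r in raw]

print(is_k_anonymous(raw, ["zip", "age"], k=2))     # False: every exact age is unique
print(is_k_anonymous(shared, ["zip", "age"], k=2))  # True: each (zip, band) pair covers 2 people
```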

4. Data Mesh

Data management, the linchpin of BI, continues to grapple with volume and complexity challenges. Operational data serves current transactional business needs, while analytical data supports historical analysis and forecasting.

Manually handling the two can slow down centralized data teams.

Enter the data mesh, an emerging business analytics concept that organizes data into separate domains. It shifts control from a single central data team to individual units, each handling its own data products.

According to Zhamak Dehghani, who coined the term data mesh:

“Data mesh, at core, is founded in decentralization and distribution of responsibility to people who are closest to the data in order to support continuous change and scalability.”

Autodesk, a design and engineering software vendor, partners with Atlan to drive a modern BI platform using the data mesh concept. They have 60 domain teams with complete visibility into how end users consume their data.

Each team has complete autonomy to ingest, process and publish data for consumers within its domain. Instead of data engineers working in silos, each team pairs data engineers with product owners who complement each other.
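The domain-ownership idea can be sketched as a shared catalog where only the owning domain may publish or update its data products, while anyone can discover them. The class names and fields are hypothetical and do not reflect Atlan’s or Autodesk’s actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """A dataset that one domain team owns and publishes for others to consume."""
    name: str
    domain: str
    owner: str
    schema: dict  # column name -> type: the contract consumers rely on


@dataclass
class Catalog:
    """Hypothetical shared catalog: domains publish, everyone discovers."""
    products: dict = field(default_factory=dict)

    def publish(self, product: DataProduct, publisher_domain: str) -> None:
        existing = self.products.get(product.name)
        # A domain may only publish its own products and may not overwrite another domain's.
        if product.domain != publisher_domain or (
            existing is not None and existing.domain != publisher_domain
        ):
            raise PermissionError(f"{publisher_domain} cannot publish {product.name}")
        self.products[product.name] = product

    def by_domain(self, domain: str) -> list:
        return [p for p in self.products.values() if p.domain == domain]


catalog = Catalog()
catalog.publish(DataProduct("daily_orders", "sales", "sales-data-team",
                            {"order_id": "str", "amount": "float"}), publisher_domain="sales")
print([p.name for p in catalog.by_domain("sales")])  # ['daily_orders']
```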

What’s Next?

Assigning high-performing teams to separate data products lets you serve multiple users with unique needs. However, the data mesh might not be for everyone, so consider your requirements before going all in.

Cross-functional collaboration is valuable, but it can surface skills gaps, such as in metadata management, that need to be addressed. The data mesh might require upskilling across teams.

5. Data Governance

Data governance involves defining the policies, procedures and standards for managing data according to your organization’s strategic goals and compliance requirements.

These policies cover data ownership, quality standards, data lifecycle management and regulatory compliance. Governance protocols support data security, keeping information safe from malicious intent and inadvertent modifications.

What’s Next?

Despite AI-driven automation, enforcing governance at scale is challenging. How much control is too much? Who should get access and to which information? How can we share data while keeping it safe?

Software that helps answer the above questions with built-in access control and meets compliance requirements will be in demand.

Additionally, organizations need smooth consent workflows, efficient data mapping and AI-driven risk assessment.
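Built-in access control often works at the column level: sensitive fields stay visible to authorized roles and are masked for everyone else. The policy table and role names below are hypothetical; BI platforms implement this through their own security settings.

```python
# Hypothetical policy: the roles allowed to see each sensitive column unmasked.
COLUMN_POLICY = {
    "email": {"admin", "support"},
    "salary": {"admin", "hr"},
}


def apply_policy(row: dict, role: str, policy: dict = COLUMN_POLICY) -> dict:
    """Return a copy of the row with sensitive columns masked for unauthorized roles."""
    visible = {}
    for column, value in row.items():
        allowed = policy.get(column)
        if allowed is None or role in allowed:
            visible[column] = value  # unrestricted column, or the role is authorized
        else:
            visible[column] = "***"  # mask rather than drop, so the schema stays stable
    return visible


row = {"name": "Ann", "email": "ann@example.com", "salary": 90000}
print(apply_policy(row, "hr"))  # {'name': 'Ann', 'email': '***', 'salary': 90000}
```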

6. Data Security

Insider security breaches can be more costly and expose more sensitive data than attacks by external hackers, and they are harder to oversee.

According to IBM’s Cost of a Data Breach Report 2023, 82% of the breaches studied between March 2022 and March 2023 involved data stored in the cloud, and the 39% of breaches involving data spread across multiple environments incurred a higher-than-average cost of USD 4.75 million.

Firewalls and zero-trust protocols alone are a drop in the ocean. Securing data at internet endpoints for edge computing comes with its own challenges.

The GDPR sets legal requirements for data security and governance. Organizations must protect personal data from breaches, unauthorized access and other security-related incidents with technical and organization-wide measures.

At the same time, they need to give users access to their data on demand and open themselves up to security audits.

What’s Next?

As hackers become more innovative and users become more aware, regulations will become stricter. Big data volumes demand an engineering-first approach to customize security features for your organization.

AI data governance poses fresh challenges. Can AI support cybersecurity? How can we rein in AI? It’s like giving a kid the keys to the candy store.

Security and AI: AI Trust, Risk and Security Management (AI-TRiSM)

AI algorithms can extract information easily, which makes data leaks more probable.

Pre-existing errors in data are likely to multiply as AI systems keep learning from them. Besides, AI isn’t free from race, gender and socio-economic biases.
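One simple way to start checking for bias is demographic parity: compare how often a model approves people from each group. The sketch assumes decisions arrive as (group, approved) pairs with made-up data. A gap is a signal to investigate rather than proof of bias, and demographic parity is only one of several fairness metrics.

```python
from collections import defaultdict


def approval_rates(decisions) -> dict:
    """decisions: iterable of (group, approved) pairs. Returns the approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions) -> float:
    """Largest approval-rate difference between any two groups (assumes at least one decision)."""
    rates = approval_rates(decisions).values()
    return max(rates) - min(rates)


decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(approval_rates(decisions))  # {'A': 0.8, 'B': 0.5}
print(round(parity_gap(decisions), 2))  # 0.3
```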

Where’s the trust?

How can enterprises use AI with confidence? AI trust, risk and security management (AI-TRiSM) is an evolving framework pushing for AI access governance, reliability and data protection.

AI for Security: The Good It Can Do

AI algorithms help mitigate risk because they can be trained to identify fraud and remove malware, botnets and spam content. Automated risk assessment and user authentication help teams respond quickly, for example by deploying patches, when something goes wrong.

Machine learning systems can record normal network behavior, giving teams a baseline for spotting anomalies and supporting disaster recovery.
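Recording a baseline of normal behavior is what makes anomalies stand out. The sketch below uses a plain statistical baseline (mean and standard deviation of requests per minute) as a stand-in for a learned model; the metric, sample values and three-standard-deviation threshold are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(baseline: list, new_values: list, threshold: float = 3.0) -> list:
    """Flag values more than `threshold` standard deviations away from the recorded baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # needs at least two baseline points
    if sigma == 0:
        return [v for v in new_values if v != mu]  # any change from a flat baseline is unusual
    return [v for v in new_values if abs(v - mu) / sigma > threshold]


baseline = [100, 102, 98, 101, 99, 100, 103, 97]  # requests per minute on a normal day
print(flag_anomalies(baseline, [101, 250, 94]))  # [250]
```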

Enterprises will seek advanced risk assessment technologies for AI in business analytics platforms.

7. Automation

Vendors provide automation code for individual tasks and entire infrastructures, allowing you to deploy whole systems with a few clicks. Automation does what humans can’t — it drives consistent, audited action and reduces errors.

Automated tasks include system administration, monitoring, task reviews and approvals, database management, integration, systems management, and OS patching.

Automation at the system level includes the management of source connections, networks, computing and storage.

Automation also drives software-as-a-service (SaaS), providing scalable and elastic cloud computing. For example, Oracle Autonomous Database scales automatically.
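Automatic scaling usually follows a feedback rule. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × current utilization ÷ target utilization), clamped to configured limits. It is not a description of how Oracle Autonomous Database scales, and the bounds are assumptions.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional scaling rule: resize so average utilization moves toward the target."""
    desired = math.ceil(current * current_util / target_util)
    return max(min_replicas, min(max_replicas, desired))  # stay within configured bounds


print(desired_replicas(4, 0.75, 0.5))  # 6: busy, so scale out
print(desired_replicas(6, 0.25, 0.5))  # 3: idle, so scale in
print(desired_replicas(8, 1.0, 0.5))   # 10: capped at max_replicas
```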

What’s Next?

Legacy systems and manually configured components can block automation efforts. A dedicated data team can lessen onboarding pangs by encouraging and enforcing adoption.

8. Citizen Data Scientists

According to Statista, the market size of the BI and analytics software industry will likely grow to over $18 billion by 2026. It was $15.3 billion in 2021.
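Those two Statista figures imply a compound annual growth rate (CAGR) over the five years from 2021 to 2026. Treating “over $18 billion” as exactly $18 billion, which makes the result a lower bound, a quick worked calculation gives:

```latex
\text{CAGR} = \left(\frac{18}{15.3}\right)^{1/5} - 1 \approx (1.176)^{0.2} - 1 \approx 0.033
```

That works out to roughly 3.3% a year.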

This slow and steady march is understandable, considering we’ve been through a pandemic and an uncertain market landscape. Happily, progress might slow down, but it never stops.

The demand for self-service BI, data visualization and fast insight drives the upward trend in the analytics software market. Data democratization is a significant driver as enterprises look for ways to accelerate their go-to-market strategies.

As business users become citizen developers, automation enables them to build and run workflows and package them into reusable apps.

Will data analysts become redundant?

What’s Next?

Shaku Atre, keynote speaker and president of Atre Group Inc., said quite the opposite: There will be more data scientists as time passes.

“If anything there will be an increase in data scientists. It is possible that it may come in additional flavors with the increase in additional features with Artificial Intelligence.

The current “catch all” term being “Artificial Intelligence” it is highly likely that additional flavors for additional functions provided may be reflected as “Data Scientist for Artificial Intelligence”, “Data Derivator for Artificial Intelligence.”

Software is getting more sophisticated, but it is nowhere near the human brain’s inference drawing capabilities. In order for the software to have that capability, it will need more data and not only more high-level data, but more granular data.

And that is where a data scientist is absolutely needed. People have been naïve to think that new technology is going to solve all the problems in no time. It has never happened. And, I think, it will never happen.”

Mike Galbraith, Vice President of Technology Strategy & Solutions at ThoughtFocus, echoed Atre’s sentiments and added his take.

Dedicated data teams may tail off as automation matures and data proliferates, but data scientists themselves aren’t going away.

“Yes, I do think data scientists and other dedicated roles in the business analytics area will continue to be prevalent in the coming year, but that trend for dedicated teams and roles will start to tail off as automation technologies and AI services evolve, as companies begin to get a handle on the data flowing in and around their businesses and as skills and methods within organizations mature.”

According to Galbraith, as self-service BI becomes the norm, the role of data specialists will change.

“The specialist titles will become more generalized as the roles and skills become more pervasive within the business function and a company’s organization becomes more data-driven. For instance, as organizations transform to become more data-driven and their cultures change to institutionalize a more digital way of doing business, business analysts in particular functional areas, whether in finance or supply chain or business development or manufacturing, will soon have a good grasp on the technical tools and methods that make up the foundation of data analytics.”

Ryan Wilson, Vice President of Technology at Signal Ventures LLC, emphasized this.

“What we’re starting to see is very business user-friendly business intelligence platforms that can be highly automated and are starting to incorporate some data science tools that don’t require a data scientist with a Ph.D. to utilize.”

Wilson echoed Galbraith and Atre’s thoughts on self-service BI, saying that more non-technical employees will assume analyst roles.

“This is going to lead to more and more companies incorporating data-driven business at every level of the business. As this happens I think we’ll start to see everyone becoming a bit of an analyst which will start to shift the role of a dedicated analyst to running, maintaining, and extending these platforms and tools in most organizations.”


9. Internet of Things (IoT)

The Internet of Things (IoT) includes connectivity to smart home devices, sensors, chatbots, digital assistants and heavy equipment.

Data from these devices is part of business intelligence for vendors and end users, whether it streams live into your systems or is processed at internet endpoints like mobile devices and smartwatches.

Besides, many cloud verticals pull data from off-premises, internet-connected devices that use their applications.

Manufacturing, supply chain, transportation, marketing and sales management departments have staff, machinery and vehicles in the field, generating and collecting business-critical data.

Predictive maintenance involves monitoring offsite equipment, anticipating wear and tear, and planning repairs. It features high on the requirements checklist of businesses with field equipment and vehicles.

According to Verified Market Research, the global predictive maintenance market will likely reach $49.54 billion by 2030.
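At its simplest, anticipating wear and tear means watching a sensor trend and raising an alert before it crosses a failure threshold. This sketch uses a rolling mean of vibration readings; the readings, window and limit are hypothetical, and production predictive maintenance typically relies on models trained on historical failure data.

```python
from collections import deque


def rolling_alerts(readings: list, window: int = 3, limit: float = 7.0) -> list:
    """Return the indices where the mean of the last `window` readings exceeds `limit`."""
    recent = deque(maxlen=window)  # automatically drops the oldest reading
    alerts = []
    for i, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window and sum(recent) / window > limit:
            alerts.append(i)
    return alerts


vibration_mm_s = [4.0, 4.2, 4.1, 5.0, 6.5, 8.0, 9.1]  # hypothetical pump sensor readings
print(rolling_alerts(vibration_mm_s))  # [6]
```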

What’s Next?

Edge analytics is a power-saving alternative to traditional data systems that move raw data into a data store before analysis. It processes data on the source device and uploads the results to the central database in small packets.

Many transactional applications, like mobile banking apps, process data at the edge.
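The “small packets” idea can be sketched as a device that summarizes raw readings in batches and uploads only the summaries. The packet fields, device ID and batch size are assumptions for illustration.

```python
from statistics import mean


def summarize_batch(device_id: str, readings: list) -> dict:
    """Reduce a batch of raw readings to a compact summary packet on the device."""
    return {
        "device": device_id,
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }


def packets(device_id: str, stream, batch_size: int):
    """Group a raw stream into fixed-size batches and yield one summary per batch."""
    batch = []
    for value in stream:
        batch.append(value)
        if len(batch) == batch_size:
            yield summarize_batch(device_id, batch)
            batch = []
    if batch:  # flush a final partial batch
        yield summarize_batch(device_id, batch)


for packet in packets("thermo-7", [20.0, 21.0, 22.0, 30.0, 31.0], batch_size=3):
    print(packet)  # these summaries, not the raw readings, go to the central database
```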

Capturing data from streaming and edge devices requires advanced technologies that consume power. Recording real-time data with techniques like change data capture (CDC) while the data is in transit is resource-intensive and costly.
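To show what a change stream contains, the sketch below derives insert, update and delete events by comparing two snapshots of a table. Production CDC tools typically read the database’s transaction log instead, precisely because diffing full snapshots means scanning every row. The inventory table is hypothetical.

```python
def diff_snapshots(before: dict, after: dict) -> list:
    """Compare two snapshots keyed by primary key and emit (op, key, row) change events."""
    changes = []
    for key, row in after.items():
        if key not in before:
            changes.append(("insert", key, row))
        elif before[key] != row:
            changes.append(("update", key, row))
    for key, row in before.items():
        if key not in after:
            changes.append(("delete", key, row))
    return changes


# Hypothetical inventory table: product_id -> row
monday = {1: {"qty": 5}, 2: {"qty": 3}}
tuesday = {1: {"qty": 7}, 3: {"qty": 1}}
for event in diff_snapshots(monday, tuesday):
    print(event)
```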

Besides, there’s the issue of data security. The internet and edge devices are all potential touch points outside your company ecosystem and prone to security breaches.

Making IoT analytics affordable and securing data at the edge will be on the minds of software vendors and product owners in the coming years.

10. Decision Intelligence

Gartner defined decision intelligence as incorporating “traditional non-deterministic techniques” into analytics. It augments decision-making with NLP, AI-ML and automation.

Decision intelligence combines decision science — psychology, neuroscience and economics — with data science. It closes the gap between insights and decisions by mitigating delays in the decision cycle.

How? You must define the decision pathways unique to your organization at the outset. Giving the system the decision map and linking your dataset can generate more accurate results.
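A decision map can be as simple as a list of options, each with possible outcomes whose probabilities come from your data. The sketch picks the option with the highest expected payoff. The options, probabilities and payoffs are made up, and decision intelligence platforms weigh far more than expected value (risk tolerance, constraints, timing).

```python
# Hypothetical decision map: each option lists possible outcomes as (probability, payoff).
# In practice, the probabilities would be estimated from the dataset linked to the map.
DECISION_MAP = {
    "expand_inventory": [(0.6, 120_000), (0.4, -50_000)],
    "hold_inventory": [(1.0, 20_000)],
}


def expected_value(outcomes: list) -> float:
    """Probability-weighted average payoff of one option."""
    return sum(p * payoff for p, payoff in outcomes)


def recommend(decision_map: dict) -> str:
    """Pick the option with the highest expected payoff."""
    return max(decision_map, key=lambda option: expected_value(decision_map[option]))


for option, outcomes in DECISION_MAP.items():
    print(option, round(expected_value(outcomes)))
print("recommended:", recommend(DECISION_MAP))  # recommended: expand_inventory
```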

A lot of it has to do with AI, machine learning, and social sciences. Watch this YouTube video of low-code decision intelligence with Oracle Analytics.

What’s Next?

This business analytics trend has caught the attention of leading vendors and developers. Google launched its decision engineering lab in 2018, and Alibaba recently followed suit.

Very few software products offer this capability yet, and the technology is in its nascent stages, but we can expect exciting developments in the coming years.

Software Considerations

You need a data visualization tool to perform quick and intuitive data exploration and present the results to non-technical audiences.

A BI tool provides scalable, comprehensive reporting and analysis of historical and current data. A predictive analytics tool can help you pivot with the market and create a realistic roadmap. Which one will work best for you?

Software selection can be nerve-wracking, but being mindful of your requirements can help you choose wisely.

Follow our Lean Selection Methodology to get the best returns on your software investment. Or talk to us to match products to your unique business needs.


Next Steps

Data democratization puts security and data privacy at a premium, and with generative AI integrations increasing, developing AI-related guardrails will keep vendors busy.

Cloud verticals, data marketplaces and automation change how enterprises set up their tech stack. Citizen developers are the new data analysts, though specialized data skills will continue to be in demand.

Decision intelligence is a trend to watch out for, focusing on augmenting critical decisions with data science technologies.

Are you looking for business analytics software?

Analyze your preferred software with our free, customizable comparison report, matched to your company size. Learn about their technical and functional capabilities and vendor qualifications with a handy, downloadable scorecard.

What do you think of the future of business analytics? Which trend will likely take center stage in the coming year? Let us know in the comments.

Contributing Thought Leaders

Shaku Atre is president of Atre Group, Inc., a BI and data warehousing corporation based in New York City, NY and Santa Cruz, California.

Atre is an acclaimed author, and her books include DataBase: Structured Techniques for Design, Performance and Management and Business Intelligence Roadmap: The Complete Project Lifecycle for Decision-Support Applications.

She’s an accomplished speaker, addressing audiences in the USA, Canada, Europe, South America, Asia and Australia on business intelligence, data warehousing, data mining, customer relationship management (CRM) and database technology.

Hundreds of her articles have been published in trade publications over the years.

Mike Galbraith serves as the Chief Digital Innovation Officer (CDIO) at the U.S. Department of the Navy. Over a 30-year career, he has been an IT executive, CIO, digital transformation leader and delivery leader for Fortune 200 companies.

He’s an expert in global IT strategy, enterprise architecture, delivery and operations, digital transformation, ERP systems, IoT, big data and analytics.

His accomplishments include degrees in computer science, business administration and information management. He’s a Wharton School alum with executive leadership and corporate strategy certifications.

Ryan Wilson is Vice President of Technology at Signal Ventures LLC. An experienced data analyst, Ryan built dataflows, dashboards, and cards for over 20 companies as a Domo consultant with Build Intelligence.

He led a team of developers and consultants in maintaining over 3,200 visualizations, 2,400 datasets and 700 dataflows.